INDI Library v2.0.7 is Released (01 Apr 2024)

Bi-monthly release with minor bug fixes and improvements

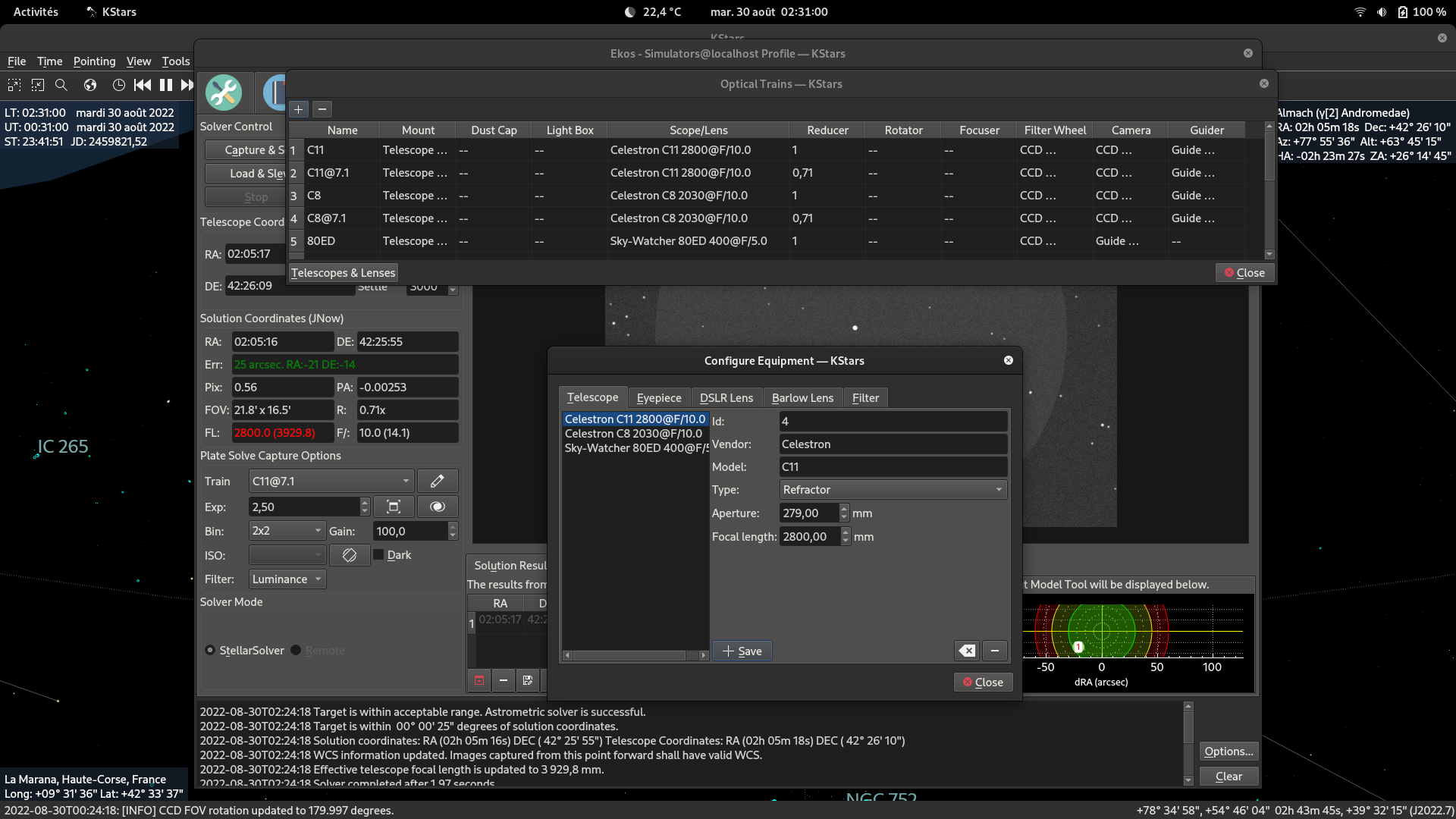

Ekos Optical Trains

Ekos Optical Trains was created by Jasem Mutlaq

Just like the Port Selector tool, this would pop up the first time a profile is run. After all devices are connected, you are asked to configure the optical train(s). You assign a label to each train. The optical trains are tied to the profile. You then configure each train and drag and drop devices into their respective places (slots) in the train. From then on, you no longer select camera/filter/focuser/guider, etc.; you simply select which train to use.

Each optical train is tied to an equipment profile, so if you create a new equipment profile, you need to configure the OT(s) for that particular setup. This paves the way for multi-camera setups: not just 2, but N-camera setups would be possible. Of course, this could get very complex and interesting very quickly.
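As a rough illustration of the relationship described above (labelled trains, each with device slots, all owned by one equipment profile), here is a minimal Python sketch. The class names, slot names and device names are hypothetical and are not taken from the KStars code base.

```python
from dataclasses import dataclass, field


@dataclass
class OpticalTrain:
    label: str
    # Slot name -> device name, in light-path order (dicts keep insertion order).
    slots: dict = field(default_factory=dict)


@dataclass
class EquipmentProfile:
    name: str
    trains: dict = field(default_factory=dict)  # label -> OpticalTrain

    def add_train(self, train):
        # Labels identify trains, so they must be unique within a profile.
        if train.label in self.trains:
            raise ValueError(f"duplicate train label: {train.label}")
        self.trains[train.label] = train

    def device(self, train_label, slot):
        """Resolve a device from a train label, so a module only needs the label."""
        return self.trains[train_label].slots[slot]


# Hypothetical profile with a single train.
profile = EquipmentProfile("Main rig")
profile.add_train(OpticalTrain("Primary", {
    "scope": "Scope1",
    "focuser": "Focuser1",
    "filter_wheel": "FW1",
    "camera": "Camera1",
}))
```

With a structure like this, a module would ask for `profile.device("Primary", "camera")` instead of keeping its own camera selection.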

Suppose for example you create a setup like this:

OT1: Scope1 -> Reducer -> Focuser1 -> OAG1 -> FW1 -> Camera1

OT2: Scope2 -> FW2 -> Camera2

Then you create a sequence to capture 5 frames for OT1 and 10 frames for OT2. The Guide module is set to use OT1 (since OAG1 is defined there). You slew the mount to a target and begin capturing. Ekos should now trigger both the OT1 and OT2 cameras to capture at the same time. However, in order to keep everything in sync, we need to be careful about the following:

1. Suppose we need to change the FW1 filter. We might need to adjust the focuser by some offset, and this would cause guiding on OAG1 to be suspended. This would ruin images being captured in OT2, so we either wait until the OT2 camera finishes the next frame, or we abort the OT2 capture and then finish the focus and filter wheel change.

2. A meridian flip would have to wait for both cameras to finish.

3. Dithering cannot start unless both cameras complete the next frame. Basically, anything affecting the mount (slew/guide/flip) would need all N cameras to complete their current capture.

4. ...etc
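The common rule in the list above is that a mount-affecting action has to wait until no camera is mid-exposure. A minimal sketch of such a gate follows; the class and method names are hypothetical, and nothing here reflects how Ekos actually implements it.

```python
class MountActionGate:
    """Defers mount-affecting actions until no optical train is exposing."""

    def __init__(self, train_labels):
        self._busy = {label: False for label in train_labels}
        self._pending = []  # action names waiting for all cameras to be idle

    def exposure_started(self, label):
        self._busy[label] = True

    def exposure_finished(self, label):
        self._busy[label] = False
        return self._release()

    def request(self, action):
        """Queue an action such as 'dither' or 'meridian_flip'."""
        self._pending.append(action)
        return self._release()

    def _release(self):
        # Release the queued actions only when every camera is idle.
        if any(self._busy.values()):
            return []
        ready, self._pending = self._pending, []
        return ready


gate = MountActionGate(["OT1", "OT2"])
gate.exposure_started("OT1")
gate.exposure_started("OT2")
held = gate.request("dither")                # both cameras busy: nothing released
after_ot1 = gate.exposure_finished("OT1")    # OT2 still exposing
after_ot2 = gate.exposure_finished("OT2")    # all idle: the dither is released
```

A real implementation would also have to stop new exposures from starting while a released action is still running; the sketch leaves that out.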

Please share your thoughts and concerns on this idea so we can come up with a solid architecture.

Replied by Wolfgang Reissenberger on topic Ekos Optical Trains

Currently, I need to stop the profile (and subsequently the INDI devices) to change the values - which is technically unnecessary.

An optical train that I could configure and select AFTER having started the INDI devices is the main advantage, and it would make EKOS even more attractive for me. Having more than one optical train on the same rig is currently too complex for me.

Cheers

Wolfgang

Replied by Eric on topic Re:Ekos Optical Trains

With this approach, some Ekos modules may modify device settings, while others may be restricted to listening to events. The config would be responsible for managing these access restrictions or, more simply, for informing each instantiated Ekos module whether it may write or only read the device settings through the INDI drivers. This information would be defined when creating the config.
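One way to picture the read/write distinction described above is a small grant table keyed by module instance and device. This is only a sketch under assumed names (`Access`, `DeviceAccessConfig`, the instance strings); it is not an existing Ekos or INDI API.

```python
from enum import Enum


class Access(Enum):
    READ_ONLY = "read"
    READ_WRITE = "write"


class DeviceAccessConfig:
    """Records, per module instance and device, whether writes are allowed."""

    def __init__(self):
        self._grants = {}  # (module_instance, device) -> Access

    def grant(self, module, device, access):
        self._grants[(module, device)] = access

    def may_read(self, module, device):
        # Any grant, read-only or read-write, allows listening to the device.
        return (module, device) in self._grants

    def may_write(self, module, device):
        return self._grants.get((module, device)) is Access.READ_WRITE


config = DeviceAccessConfig()
config.grant("capture#1", "Camera1", Access.READ_WRITE)
config.grant("align#1", "Camera1", Access.READ_ONLY)
```

Here `capture#1` may change Camera1 settings, while `align#1` may only observe them.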

Because we now possibly have multiple instances of Ekos modules, we require separable sets of settings. Ekos settings are currently stored in the KStars database, and there is only one instance of each setting item. We need to move on to individual settings sheets for each Ekos module. We could continue to store them in the KStars database, but it would be more interesting to serialise them into files (and make sure we can export the current settings properly). Users should be able to save and load settings for each target observation. Further on, we could offer a library in which to store those settings, and embed them inside Scheduler plans, with the necessary UI helpers to ease manipulation.
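To make the idea of per-instance settings sheets concrete, here is a minimal sketch that serialises one module instance's settings to JSON text and reads it back. The document layout (`module`, `train`, `settings`) and the setting keys are assumptions for illustration, not an existing KStars format.

```python
import json


def dump_settings(module, train, settings):
    """Serialise one module instance's settings sheet to JSON text."""
    doc = {"module": module, "train": train, "settings": settings}
    return json.dumps(doc, indent=2, sort_keys=True)


def parse_settings(text):
    """Inverse of dump_settings: returns (module, train, settings)."""
    doc = json.loads(text)
    return doc["module"], doc["train"], doc["settings"]


# Hypothetical settings sheet for a capture instance bound to train OT1.
sheet = dump_settings("capture", "OT1", {"exposure_s": 120, "gain": 100})
module, train, settings = parse_settings(sheet)
```

Writing `sheet` to a file per module instance would give exportable settings that could later be attached to a Scheduler plan.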

Then comes the question of state, so that alignment doesn't take place during a meridian flip, with guiding active, all this while trying to finish focusing. Currently the state is shared through notifications between Ekos modules, which then proceed, suspend or abort. With multiple module instances, the question becomes more complex. Where to put the state so that others can refer to it is something we need to think about carefully.

-Eric

Replied by Wolfgang Reissenberger on topic Re:Ekos Optical Trains

Do we want to stick with this - with the consequence that in the presence of two imaging cameras for example we need two Capture tabs? Or shall we separate the UI from the task and introduce separate task models - maybe with their own persistence layer as Eric described above?

Replied by Wolfgang Reissenberger on topic Re:Ekos Optical Trains

Replied by Thomas Mason on topic Re:Ekos Optical Trains

Replied by Hans on topic Ekos Optical Trains

I would not want to make the assumption that having OAG1 in OT1 implies that it is the guider to use, or that the OAG port even has a guide camera attached. What if OT2 also has a guide camera somewhere? Which one should be used then? I'd like to see the guide camera explicitly listed in the optical train as well. The choice of which camera to use should still be with the user, as we cannot deduce which one it is. Maybe the user wants to guide with the main camera of OT2. Also, what if the guide camera is physically there but is not to be used by INDI because PHD2 accesses it natively? (This is what I actually use.) The same goes for my SX-AO unit: it's there in the optical train but is not to be accessed by INDI, as PHD2 controls it natively.
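The two requirements in this post (an explicit, user-chosen guide camera, and devices that sit in the train but are driven by other software) could be captured with a flag on each slot. The sketch below uses hypothetical names (`Slot`, `GuideConfig`, `indi_managed`) and is not drawn from Ekos.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Slot:
    device: str
    # False: physically in the train but driven by other software (e.g. PHD2).
    indi_managed: bool = True


@dataclass
class GuideConfig:
    train: Optional[str] = None    # train the user chose to guide with
    camera: Optional[Slot] = None  # explicit guide camera, never inferred


def guide_camera_for_ekos(cfg):
    """Device name Ekos should open, or None if guiding is external or unset."""
    if cfg.camera is None or not cfg.camera.indi_managed:
        return None
    return cfg.camera.device


internal = GuideConfig("OT1", Slot("GuideCam1"))
external = GuideConfig("OT1", Slot("GuideCam1", indi_managed=False))
```

Nothing is deduced from the presence of an OAG here; an unset `camera` simply means no guide camera has been selected.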

Then on the idea of two imaging cameras: I like it.

I wonder what purpose something like a reducer in the optical train serves for INDI/EKOS. It could be used to calculate the new focal length, of course, but then all spacing rings etc. would need to be added too! That would be awesome to have, of course.

OT-N support is very interesting, and it will be difficult to implement right without impacting N==1 stability, which is already quite a challenge today.

-- Hans

Replied by Jasem Mutlaq on topic Ekos Optical Trains

1. Better equipment manager that includes DSLR lens and focal reducers (I'm working on this).

2. Optical Train Editor + Backend database.

3. Module settings manager?

4. Decouple state from GUI for each module?

Replied by Wolfgang Reissenberger on topic Ekos Optical Trains

Replied by Grimaldi on topic Ekos Optical Trains

Good idea, but similar to what Eric and Wolfgang pointed out, I believe it can only be a first step. Take, for example, FlipFlats/FlipDark or a flat position (a light panel at a fixed Alt/Az position), a roll-on-roll-off roof or a dome. Then add constraints such as certain positions that need to be avoided, a position the scope must be in to safely close the observation hut, an observatory horizon with a few trees, or a weather station, and now imagine scheduling an observation list over a few nights and reacting to changing weather conditions.

It seems that hardcoding the collaboration between modules, as is done now, will not be a good solution in the long run, as we can neither predict nor code every automation or interaction between modules that our users will want to have.

The first question therefore is: how far will Ekos/INDI want to go? Does it want to be a solution that is capable (in the long run) of running a remote observatory or not? If not, what exactly will be out of scope, and how will it interface with software capable of doing the out-of-scope work?

Let me assume in the following that Ekos/INDI will want to go quite far on this journey. How could we achieve this?

The first step, as Wolfgang said, will be refactoring the existing code to separate UI and state more clearly. I can only speak about the plate-solving module right now, but that one really orchestrates many of the other modules (take a picture, solve, slew to position), and it does all this in order to execute, for example, a polar alignment. All of this is done through code that reacts to events and tries to keep track of the current state in variables. Many event-processing routines consist of lots and lots of if/then/else deciding how to react to an event given the current state. For me, being new to the code base, it is hard to reason about and hard to change. It is also completely unclear which events to expect, when, and in what order, without reading the other module's code. Here a Strategy and/or Visitor pattern should be applied (different ones for Load&Slew, Take&Sync, PolarAlign, AimAfterMeridianFlip, etc.), which makes the state, and how to react to events, explicit. The result is a set of orchestrator classes for each module. This will hopefully also make it easier to attract contributors to the codebase.
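To show what "explicit state" can look like, here is a small table-driven state machine for a capture-solve-slew loop. It is a simpler stand-in for a full Strategy or Visitor class hierarchy, and the state and event names are invented for illustration; they are not the events the Align module actually emits.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    CAPTURING = auto()
    SOLVING = auto()
    SLEWING = auto()
    DONE = auto()
    FAILED = auto()


# Every legal (state, event) pair is listed, so the expected events are readable at a glance.
TRANSITIONS = {
    (State.IDLE, "start"): State.CAPTURING,
    (State.CAPTURING, "image_ready"): State.SOLVING,
    (State.SOLVING, "solved_off_target"): State.SLEWING,
    (State.SOLVING, "solved_on_target"): State.DONE,
    (State.SOLVING, "solve_failed"): State.FAILED,
    (State.SLEWING, "slew_complete"): State.CAPTURING,
}


class SlewToTargetFlow:
    def __init__(self):
        self.state = State.IDLE

    def expected_events(self):
        """Events that are legal in the current state."""
        return sorted(e for (s, e) in TRANSITIONS if s is self.state)

    def handle(self, event):
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(f"unexpected event {event!r} in state {self.state.name}")
        self.state = TRANSITIONS[key]
        return self.state


flow = SlewToTargetFlow()
for event in ["start", "image_ready", "solved_off_target",
              "slew_complete", "image_ready", "solved_on_target"]:
    flow.handle(event)
```

An unexpected event raises immediately instead of being silently absorbed by a chain of if/else branches, which is the property the post is asking for.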

As a parallel step, I believe a different way of collaborating between modules will be necessary. While the current approach is a form of choreography (each module watches the others, then does the right thing), I'd introduce an Orchestrator class (or set of classes) for the whole system (including the optical train) that is responsible for executing a single job. This should make it possible to reason about the whole system and avoid regressions during the refactoring in step one. Then, as more optical trains come in, relax this and have independent but collaborating orchestrator classes for them. This will hopefully also get rid of some of the more recent bugs where this collaboration fails.
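In contrast to choreography, an orchestrator owns the order of the steps for one job. The following is a deliberately tiny sketch; `JobOrchestrator` and the step names are hypothetical, and the lambdas stand in for calls into real modules.

```python
class JobOrchestrator:
    """Runs the steps of a single job in order and stops at the first failure."""

    def __init__(self, steps):
        self.steps = list(steps)  # (name, callable returning True on success)
        self.log = []             # (name, succeeded) in execution order

    def run(self):
        for name, step in self.steps:
            ok = bool(step())
            self.log.append((name, ok))
            if not ok:
                return False
        return True


# Hypothetical job: the second step fails, so capture is never attempted.
job = JobOrchestrator([
    ("slew", lambda: True),
    ("focus", lambda: False),
    ("capture", lambda: True),
])
succeeded = job.run()
```

Because one object decides what runs next, the whole job can be reasoned about (and tested) in one place.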

If we want to keep the choreography, a Blackboard could be used as an alternative: all modules publish their state to it, so that other modules are aware of what's happening and can veto, vote or otherwise collaborate using some global state. (I don't have experience with such an architecture, so I don't know whether it really makes things easier.) I believe this would need to be thread safe.
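A minimal, lock-protected blackboard with veto callbacks might look like the sketch below. All names are hypothetical; it only illustrates the publish/veto idea and the thread-safety concern raised above.

```python
import threading


class Blackboard:
    """Shared state that modules publish to; vetoers can block an action."""

    def __init__(self):
        self._lock = threading.Lock()
        self._state = {}    # module name -> published status
        self._vetoers = []  # callables (action, state) -> True to veto

    def publish(self, module, status):
        with self._lock:
            self._state[module] = status

    def add_vetoer(self, vetoer):
        with self._lock:
            self._vetoers.append(vetoer)

    def allowed(self, action):
        # Copy under the lock, then call vetoers outside it to avoid deadlocks
        # if a vetoer publishes to the blackboard itself.
        with self._lock:
            state = dict(self._state)
            vetoers = list(self._vetoers)
        return not any(veto(action, state) for veto in vetoers)


board = Blackboard()
# Hypothetical rule: no alignment while the mount reports a meridian flip.
board.add_vetoer(lambda action, state: action == "align" and state.get("mount") == "flipping")
board.publish("mount", "flipping")
during_flip = board.allowed("align")
board.publish("mount", "tracking")
after_flip = board.allowed("align")
```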

The next step could then be to move that collaboration, sooner or later, into some DSL (domain-specific language) or a rule engine, so that it becomes configuration. The task after that is to increase its coverage, to avoid hardcoding and to open things up for more customization and easier support of less common product combinations.
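As a hint of what "collaboration as configuration" could mean, the sketch below expresses blocking rules as plain data and evaluates them with one generic function. The rule format, module names and status strings are all assumptions made for this example.

```python
# Each rule blocks an action while any listed module reports the given status.
RULES = [
    {"action": "dither", "blocked_while": {"capture": "exposing"}},
    {"action": "meridian_flip", "blocked_while": {"capture": "exposing"}},
    {"action": "align", "blocked_while": {"mount": "flipping", "focus": "running"}},
]


def blocked(action, state, rules=RULES):
    """Return True if any rule for this action matches the current state."""
    for rule in rules:
        if rule["action"] != action:
            continue
        if any(state.get(module) == status
               for module, status in rule["blocked_while"].items()):
            return True
    return False


state = {"capture": "exposing", "mount": "tracking", "focus": "idle"}
```

Adding a new interaction would then mean adding a rule entry (which could be loaded from a user-editable file) rather than touching module code.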

One thing that I'd not change is using INDI as an abstraction layer for instruments and as a means of separating compute.

Hope this helps,

Jens

Replied by Jasem Mutlaq on topic Ekos Optical Trains

Replied by Marc on topic Ekos Optical Trains

I played with the new functions, only with the simulators because of a cloudy night.

Here's what I have found:

The fields Pix, Fov, Fl and F/ in the Alignment tab seem wrong :

- Considering the focal length of 2800 with a 0.71 reducer, the displayed FL should be 1980.

- With a 3.8 µm pixel size, the Pix field should be 0.39

- F/ should be 7.1

- The FOV should be 30.3'x22.7'

At least if I understand the concept
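The expected values in the list above follow from standard formulas, sketched here in Python. The 280 mm aperture is an assumption (it matches a C11, which is mentioned later in this post), and the sensor resolution needed for the FOV figure is not given, so the FOV helper is shown without numbers. Note that 2800 × 0.71 is 1988, slightly more than the rounded 1980 quoted above.

```python
def effective_focal_length(focal_length_mm, reducer):
    """Focal length after a reducer (factor below 1) or Barlow (factor above 1)."""
    return focal_length_mm * reducer


def pixel_scale_arcsec(pixel_um, focal_length_mm):
    """Arcseconds per pixel; 206.265 is arcsec per radian divided by 1000 (µm to mm)."""
    return 206.265 * pixel_um / focal_length_mm


def focal_ratio(focal_length_mm, aperture_mm):
    return focal_length_mm / aperture_mm


def fov_arcmin(pixels, scale_arcsec):
    """Field of view along one sensor axis, in arcminutes."""
    return pixels * scale_arcsec / 60.0


fl = effective_focal_length(2800, 0.71)
scale = pixel_scale_arcsec(3.8, fl)
ratio = focal_ratio(fl, 280)  # 280 mm aperture is an assumption, not from the post
```

Rounded, these give a pixel scale of 0.39 arcsec/pixel and a focal ratio of 7.1, matching the Pix and F/ values expected in the post.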

Once you have added an OT (with the "+"), you can't remove it with the "-" tab.

Could the problem with the 0.71 reducer be related to my French keyboard, which refuses the period and only accepts the comma?

(In French, the comma is used instead of the period and the period is used instead of the comma ... Don't ask.)
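Whether the keyboard is really the cause is not established here. If it is, one tolerant approach is to accept either decimal separator when parsing the field. The helper below is a hypothetical sketch in Python, not the parsing code KStars uses.

```python
def parse_decimal(text):
    """Parse '0.71' or '0,71'; reject input that mixes both separators."""
    cleaned = text.strip()
    if "," in cleaned and "." in cleaned:
        # Could be a thousands separator; refuse to guess.
        raise ValueError(f"ambiguous number: {text!r}")
    return float(cleaned.replace(",", "."))


reducer_fr = parse_decimal("0,71")
reducer_en = parse_decimal("0.71")
```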

Lastly, for this quick first test, one suggestion:

In the planetarium, instead of the INDI driver of the mount could you display the profile? C11 or C11@7.1 in my case ...

Now, let me dream a little bit

One day, EKOS will be able to deal with several mounts and several OTs.

You just change the OT in the mount tab and a new crosshair is created; depending on which mount is selected, you send the appropriate mount where you want. By changing the profile in the Align tab or in the Capture tab, you drive your scopes with one single computer, watching them all in the same KStars, eventually with one Raspi per mount addressed by its IP, the INDI servers being chained or addressed through a hub.

Let's take advantage of this server/client architecture, which is SO superior to the things you can see on lesser OSs.

Well, time to wake up Marc

Thanks for your time Jasem!

-Marc
